Word Count

Writers Talk About Writing

When Failure Is an Option

Although we can take inspiration from stories where "failure is not an option," engineers think a lot about situations in which it very much is an option. Certainly in the world of computers, failure is never far away, and consequently there is some interesting vocabulary around anticipating and managing that failure.

We can start with a term we all know: 404, which is the error code that web servers are supposed to return if you request a web page that doesn't exist. There's an entire series of such codes; any code in the 400 or 500 range is for a failure condition, including 403 (forbidden) and the dreaded 500 (internal server error). But 404 is the code we all know best, to the extent that it's made its way into the dictionary as slang for "a person who is stupid or uninformed."
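For the curious, here's a small Python sketch that sorts status codes into the two failure families. Codes in the 400s conventionally mean the client asked for something it can't have, and codes in the 500s mean the server itself stumbled. The sketch uses the standard library's `http.HTTPStatus` enum for the official reason phrases; the `describe` function is just a hypothetical illustration, not something a web server actually runs.

```python
from http import HTTPStatus


def describe(code: int) -> str:
    """Label an HTTP status code with its reason phrase and failure family."""
    # HTTPStatus(code) raises ValueError for codes the enum doesn't know.
    phrase = HTTPStatus(code).phrase
    if 400 <= code < 500:
        kind = "client error"   # e.g., 403 Forbidden, 404 Not Found
    elif 500 <= code < 600:
        kind = "server error"   # e.g., 500 Internal Server Error
    else:
        kind = "not a failure"
    return f"{code} {phrase} ({kind})"


if __name__ == "__main__":
    for code in (200, 403, 404, 500):
        print(describe(code))
```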

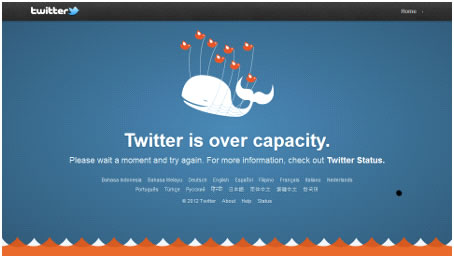

Websites are free to implement a 404 error page any way they like, and there are some brilliant ones, including one that lets you play Space Invaders. In fact, some websites have turned failure conditions into whimsy: Twitter reports errors with its famous Fail Whale, Google has its Broken Robot, Tumblr has its Tumbeasts, and there are many more of these fail pets.

Twitter's Fail Whale, one of several "fail pets"

If you've shopped for a computer hard disk recently, you might have run across RAID, short for "redundant array of independent disks." Hard disks are notoriously prone to failure, so there are various strategies for mitigating the inevitable. The "R" in RAID is a term you encounter frequently in discussions of failure: redundancy, i.e., keeping data on multiple disks. For example, the data might be mirrored (duplicated) across multiple disks. It might also be striped, meaning that the data is divided into "stripes" that are spread across multiple disks. On its own, striping improves speed rather than safety, so it's typically paired with mirroring or with extra error-correcting (parity) data.
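Here's a toy illustration of mirroring versus striping in Python. It's a sketch under obvious simplifying assumptions: each "disk" is just a byte string, and the 4-byte stripe size is arbitrary (real arrays use much larger stripes and do this work in hardware or in the operating system). The names `mirror`, `stripe`, and `unstripe` are hypothetical.

```python
from typing import List


def mirror(data: bytes, disks: int) -> List[bytes]:
    """Mirroring: every disk gets a full copy of the data."""
    return [data for _ in range(disks)]


def stripe(data: bytes, disks: int, stripe_size: int = 4) -> List[bytes]:
    """Striping: cut data into fixed-size chunks and deal them out round-robin."""
    out = [bytearray() for _ in range(disks)]
    for n, start in enumerate(range(0, len(data), stripe_size)):
        out[n % disks] += data[start:start + stripe_size]
    return [bytes(d) for d in out]


def unstripe(disks: List[bytes], stripe_size: int = 4) -> bytes:
    """Reassemble striped data by reading chunks back in the same round-robin order."""
    result = bytearray()
    k = 0
    while True:
        disk = disks[k % len(disks)]
        offset = (k // len(disks)) * stripe_size
        chunk = disk[offset:offset + stripe_size]
        if not chunk:  # ran past the end of the data
            break
        result += chunk
        k += 1
    return bytes(result)


if __name__ == "__main__":
    data = b"ABCDEFGHIJ"
    print(mirror(data, 2))   # two full copies of the data
    print(stripe(data, 2))   # [b'ABCDIJ', b'EFGH']
```

The trade-off shows up immediately: with mirroring, losing one disk costs nothing because the other still holds a full copy, but with plain striping each disk holds only part of the data, so losing any one of them loses data.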

If you've got redundant components, whether they're disks or processors or something else, you also want to be able to move from a failed component to one that's still working. This is known as failover, a term that can function as a verb ("we started failing over servers"), an adjective ("failover cluster"), or a noun ("the system experienced numerous failovers"). If the redundant system runs concurrently with the primary system, it's said to be on hot standby and is instantly ready for a failover. A warm standby can be brought online on short notice, and a cold standby is roughly equivalent to restoring a system from an offline backup.
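Here's a minimal Python sketch of failover from a client's point of view, assuming a hot standby (that is, the standby is already running and can take a request immediately). The server names and the `request` callable are hypothetical stand-ins for real network calls, and `call_with_failover` is an illustrative name, not a library function.

```python
from typing import Callable, List, Sequence


def call_with_failover(servers: Sequence[str], request: Callable[[str], str]) -> str:
    """Send the request to the primary; if it fails, fail over to the next standby."""
    errors: List[str] = []
    for server in servers:  # servers[0] is the primary, the rest are standbys
        try:
            return request(server)
        except ConnectionError as exc:
            errors.append(f"{server}: {exc}")  # note the failure and move on
    raise ConnectionError("all servers failed: " + "; ".join(errors))


if __name__ == "__main__":
    def request(server: str) -> str:
        # Hypothetical: pretend the primary is down.
        if server == "primary":
            raise ConnectionError("down")
        return f"handled by {server}"

    print(call_with_failover(["primary", "standby-1"], request))
```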

Programmers have to anticipate failure conditions in their programs. For example, what if you try to save a document to disk, but the disk is full? One way to deal with such cases is to add exception handling to a program, so that the program anticipates exceptional circumstances (known unknowns, so to speak) and responds to them instead of simply crashing.
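In Python, for example, handling the disk-full case might look something like this sketch. It assumes a POSIX-style error code: a full disk surfaces as an `OSError` whose `errno` is `errno.ENOSPC` ("no space left on device"). The `save_document` function is a hypothetical example, not a library API.

```python
import errno


def save_document(path: str, text: str) -> str:
    """Try to save text to path; report failure instead of crashing."""
    try:
        # The write (or the flush when the file closes) is where a full disk shows up.
        with open(path, "w", encoding="utf-8") as f:
            f.write(text)
    except OSError as exc:
        if exc.errno == errno.ENOSPC:
            return "error: the disk is full"
        # Any other I/O problem (missing folder, no permission, ...).
        return f"error: could not save ({exc.strerror})"
    return "saved"


if __name__ == "__main__":
    print(save_document("draft.txt", "Hello, world"))
```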

The exception doesn't always have to result in an actual error. For example, I recently learned of a strategy for handling the exception that occurs when a website doesn't respond to a request within a specified period, i.e., when the request times out. Because a timeout can be caused by "transient conditions" rather than by a hard failure, it's often helpful to simply retry the operation. But you don't want to just keep hammering the website with new requests, which might then indeed cause a failure. Programs therefore often implement the wonderfully named exponential backoff: before each retry they wait a progressively longer (doubled) interval, such as 1 second, then 2 seconds, then 4 seconds, until they either succeed or decide that the operation really is a failure. I love this term so much that I keep trying to find ways to work it into everyday conversation.
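Here's one way to sketch exponential backoff in Python. It assumes the operation signals a timeout by raising the built-in `TimeoutError`; `retry_with_backoff` and its parameters are hypothetical names, and the `sleep` parameter exists only so the waiting can be swapped out in tests.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation(); on TimeoutError, wait 1 s, 2 s, 4 s, ... and try again."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    delay = base_delay
    attempt = 1
    while True:
        try:
            return operation()
        except TimeoutError:
            if attempt >= max_attempts:
                raise  # give up: treat it as a real failure
            sleep(delay)
            delay *= 2  # exponential backoff: double the wait each time
            attempt += 1
```

With the defaults, an operation that keeps timing out is tried four times, with waits of 1, 2, and 4 seconds in between, and then the final `TimeoutError` is passed along to the caller. Many production implementations also add a bit of random "jitter" to each wait so that lots of clients don't all retry at the same instant.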

The general term for all of these strategies is fault tolerance. However, faults still occur. Therefore, engineers often design systems so that if they are going to fail, they do so as soon as practical, a strategy known as fail-fast. Better for a component to fail while it's still on the ground rather than, say, on final approach to Mars. The idea of "fail fast" has been generalized into less engineering-oriented contexts; for example, it's seeped into business talk, where entrepreneurs are encouraged to "fail fast, fail often, and fail by design." I recently gave a talk about writing documentation, and I encouraged the audience to "fail fast," that is, to tell readers right away whether the page they'd landed on contained the information they were seeking.
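In code, failing fast often just means checking your assumptions before you start the real work. Here's a minimal Python sketch; the `start_upload` function and the setting names (`host`, `port`, `username`) are hypothetical stand-ins for whatever a real program would need.

```python
from typing import Any, Dict


def start_upload(config: Dict[str, Any]) -> str:
    """Validate every setting up front, then (hypothetically) begin a long upload."""
    # Fail fast: stop immediately if anything required is missing.
    missing = [key for key in ("host", "port", "username") if key not in config]
    if missing:
        raise ValueError(f"missing settings: {', '.join(missing)}")
    # Fail fast: reject an impossible port number before connecting anywhere.
    if not 0 < config["port"] < 65536:
        raise ValueError(f"invalid port: {config['port']}")
    # Only now would the real, long-running work begin.
    return f"uploading to {config['host']}:{config['port']}"
```

If a setting is missing, the program stops right away with a clear message, instead of getting halfway through an upload and then failing in some more confusing way.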

And of course engineers court failure as a way to test their systems. One way to look at the discipline of software testing is that it essentially consists of finding ways to break software. The engineers at Netflix took, well, an engineering approach to this. To test the robustness of their system, they wrote a program with the excellent name Chaos Monkey, which works like "unleashing a wild monkey with a weapon in your data center to randomly shoot down instances and chew through cables." The name has started to catch on; for example, an article in Forbes last year used Chaos Monkey in the generic sense of an agent who runs amok in your system in order to test it. Perhaps if Shakespeare had been a software tester, his motto would have been to "Cry 'havoc' and let slip the chaos monkeys."
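To get a feel for the idea, here's a toy chaos monkey in Python. To be clear, this is not Netflix's actual tool: the `Cluster` class is a hypothetical stand-in for a fleet of servers, and "shooting down" an instance just means removing it from a set. The random generator is passed in so that a test run can be repeated with the same seed.

```python
import random


class Cluster:
    """A toy, in-memory stand-in for a fleet of server instances."""

    def __init__(self, size: int) -> None:
        self.alive = {f"instance-{i}" for i in range(size)}

    def handle_request(self) -> str:
        # The service stays up as long as at least one instance survives.
        if not self.alive:
            raise RuntimeError("outage: no instances left")
        return f"served by {min(self.alive)}"


def chaos_monkey(cluster: Cluster, rng: random.Random) -> str:
    """Randomly 'shoot down' one running instance and return its name."""
    victim = rng.choice(sorted(cluster.alive))
    cluster.alive.remove(victim)
    return victim


if __name__ == "__main__":
    cluster = Cluster(size=3)
    rng = random.Random(42)
    for _ in range(2):
        print("chaos monkey killed", chaos_monkey(cluster, rng))
        print(cluster.handle_request())  # should still succeed
```

The point of the exercise is the check after each attack: if `handle_request` keeps working while instances disappear, the system is tolerating faults; if it breaks, you've found a weakness before your users did.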